Outsourcing a metabolomics analysis service or a metabolomic profiling service should result in a reproducible, traceable, and reanalysis-ready deliverables package—not just a few plots. Use this metabolomics data deliverables checklist to write SOW language that protects timelines, enables audits, and prevents rework.

What "deliverables" mean when you outsource metabolomics

Deliverables are a complete, organized file bundle that lets your team and auditors retrace every step, re-run analyses, and validate findings months later. In metabolomics, that means raw vendor files plus open formats for reprocessing, an instrument method and run-order table that map SampleID to InjectionID, feature tables with a clear field dictionary, identification evidence with confidence levels, a QC report that documents diagnostics (not just summary stats), any pathway or network outputs with reproducibility metadata, and an analysis manifest that captures software, versions, and parameters. Get this right, and retesting, merged analyses, audit queries, and publication all move faster.

The baseline metabolomics data deliverables checklist you should request (minimum)

For any LC–MS metabolomics project, a minimal, audit-ready package includes:

- Vendor raw files, plus open-format mzML (if agreed).

- Instrument method files.

- A run-order/sequence table.

- A feature table CSV with a field dictionary.

- Sample metadata (minimum fields).

- An identification summary with evidence references.

- A QC mini-report with the required diagnostics.

- An analysis manifest with software versions and parameters.

Missing any required item increases rework risk and jeopardizes reproducibility. If your team wants to sanity-check scope before kickoff, ask for a "sample deliverables pack" to compare against this checklist.
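To make the checklist enforceable, a delivery folder can be screened for missing items before acceptance. The sketch below is a minimal Python example under stated assumptions: the folder layout and file names in `REQUIRED_ITEMS` (such as `raw/`, `run_order.csv`, and `analysis_manifest.yaml`) are hypothetical placeholders, not a standard, so map them to whatever names your SOW specifies.

```python
from pathlib import Path

# Hypothetical layout: each baseline deliverable mapped to a path relative to
# the delivery root. Entries ending in "/" are folders that must be non-empty.
REQUIRED_ITEMS = {
    "vendor raw files": "raw/",
    "instrument method files": "methods/",
    "run-order table": "run_order.csv",
    "feature table": "feature_table.csv",
    "field dictionary": "field_dictionary.csv",
    "sample metadata": "sample_metadata.csv",
    "identification summary": "identification_summary.csv",
    "QC report": "qc_report.pdf",
    "analysis manifest": "analysis_manifest.yaml",
}

# Only required when mzML conversion is agreed in the SOW.
OPTIONAL_ITEMS = {"mzML files": "mzml/"}


def missing_deliverables(root, items=REQUIRED_ITEMS):
    """Return the names of checklist items absent from the delivery folder."""
    root = Path(root)
    missing = []
    for item, rel_path in items.items():
        path = root / rel_path
        if rel_path.endswith("/"):
            # Folder items count as present only if they contain something.
            present = path.is_dir() and any(path.iterdir())
        else:
            present = path.is_file()
        if not present:
            missing.append(item)
    return missing
```

When mzML is in scope, pass `{**REQUIRED_ITEMS, **OPTIONAL_ITEMS}` as `items` so the conversions are checked too.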

We do not provide a downloadable template pack. Instead, below are concise, copy‑pasteable example templates and a short validation snippet you can adapt and include in your SOW or hand to vendors. These examples are illustrative—adjust names, required columns, data types, and parameter values to match your project.

1. Field-dictionary (CSV header + example rows)

```csv
field_name,description,required/recommended,data_type,allowed_values
feature_id,Unique feature identifier (row-level),required,string,
mz,Consensus m/z value,required,float,
rt,Consensus retention time (s),required,float,
intensity_sampleX,Peak area for sample X,required,float,
qc_flag,"QC processing flag (e.g., PASS/FAIL)",recommended,string,PASS|FAIL|REVIEW
```

2. Run-order / sequence (CSV header + example row)

```csv
sample_id,injection_id,filename,class_group
SMP001,INJ0001,SMP001_GroupA_Run1_INJ0001.raw,Study
```

3. Example analysis-manifest (YAML snippet)

```yaml
manifest_version: "1.0"
created_by: Analyst Initials
created_date: 2025-12-01
software:
  - name: MSConvert
    version: "3.0.22000"
    step: vendor-to-mzML conversion
  - name: MZmine
    version: "2.53"
    step: peak-picking and alignment
databases:
  - name: HMDB
    version: "4.0"
  - name: GNPS_MSP
    version: "2024-11-01"
parameters:
  peak_picking:
    mz_tolerance_ppm: 10
    rt_tolerance_sec: 30
  alignment:
    gap_extend_rt_sec: 5
normalization:
  method: probabilistic_quotient
  settings: {}
batch_correction:
  method: sva
  settings: {num_sv: 2}
notes: "Adjust software names/versions and parameter keys to match your pipeline."
```

4. Short illustrative Python validation script (pseudo-production; adapt before use)

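```python
from csv import DictReader

REQUIRED_FEATURE_COLS = ['feature_id', 'mz', 'rt']
REQUIRED_RUN_COLS = ['sample_id', 'injection_id', 'filename']
VENDOR_EXTENSIONS = ('.raw', '.wiff', '.d')  # Thermo, SCIEX, Agilent


def read_header(path):
    """Load only the header row of a CSV file (fast check)."""
    with open(path, newline='') as f:
        return DictReader(f).fieldnames or []


def check_headers(path, required):
    """Return required columns that are missing from the CSV header."""
    headers = read_header(path)
    return [c for c in required if c not in headers]


# Sample consistency check: required headers in both tables
feature_missing = check_headers('feature_table.csv', REQUIRED_FEATURE_COLS)
run_missing = check_headers('run_order.csv', REQUIRED_RUN_COLS)
if feature_missing or run_missing:
    print('HEADER FAIL', feature_missing, run_missing)
else:
    print('Headers present; proceed to content checks')

    with open('run_order.csv', newline='') as f:
        run_rows = list(DictReader(f))

    # Simple filename extension check (example vendor formats)
    for r in run_rows:
        if not r['filename'].lower().endswith(VENDOR_EXTENSIONS):
            print('Invalid filename extension for', r['sample_id'])

    # Cross-check that each SampleID has a column in the feature table.
    # Assumes wide format with intensity_<sample_id> columns, as in the
    # field dictionary example; scalable implementations should use pandas.
    feature_cols = set(read_header('feature_table.csv'))
    for r in run_rows:
        if f"intensity_{r['sample_id']}" not in feature_cols:
            print('No feature-table column for', r['sample_id'])
```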

Each example above is intentionally compact so you can paste it into an SOW or attach to a vendor RFP. For production use, expand validation scripts (use pandas or a CI check), formalize data types, and store manifests in machine-readable YAML/JSON alongside checksums for large raw files.
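Going one step beyond header checks, the field dictionary itself can drive content validation. The sketch below is a minimal, standard-library-only example that reads a dictionary in the column layout of the field-dictionary example above and reports undocumented columns, empty required values, type mismatches, and values outside `allowed_values`. The function names are hypothetical, and treating every `intensity_<sample_id>` column as an instance of the `intensity_sampleX` entry is an assumption for illustration.

```python
import csv
import io

# Casters for the data_type values used in the example dictionary.
CASTERS = {"string": str, "float": float, "int": int}


def load_dictionary(text):
    """Parse field-dictionary CSV text into {field_name: row}."""
    return {row["field_name"]: row for row in csv.DictReader(io.StringIO(text))}


def spec_for(column, dictionary):
    """Find the dictionary entry describing a feature-table column."""
    if column in dictionary:
        return dictionary[column]
    # Assumption: intensity_<sample_id> columns share the template entry.
    if column.startswith("intensity_"):
        return dictionary.get("intensity_sampleX")
    return None


def validate_feature_table(feature_text, dictionary):
    """Return human-readable problems found in feature-table CSV text."""
    errors = []
    reader = csv.DictReader(io.StringIO(feature_text))
    for column in reader.fieldnames or []:
        if spec_for(column, dictionary) is None:
            errors.append(f"column {column!r} is not in the field dictionary")
    for line_no, row in enumerate(reader, start=2):  # data starts on line 2
        for column, value in row.items():
            spec = spec_for(column, dictionary) if column else None
            if spec is None:
                continue  # undocumented column, already reported above
            value = value or ""
            if value == "":
                if spec["required/recommended"] == "required":
                    errors.append(f"line {line_no}: required {column} is empty")
                continue
            try:
                CASTERS[spec["data_type"]](value)
            except (KeyError, ValueError):
                errors.append(f"line {line_no}: {column}={value!r} is not {spec['data_type']}")
                continue
            allowed = spec.get("allowed_values") or ""
            if allowed and value not in allowed.split("|"):
                errors.append(f"line {line_no}: {column}={value!r} not in {allowed}")
    return errors
```

In practice you would read both files from disk (for example with `Path(...).read_text()`); working on strings keeps the sketch self-contained and easy to drop into a CI check.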

Metabolomics data deliverables checklist for any metabolomic profiling service—what to request in your SOW.


Raw data deliverables: vendor files, mzML (if agreed), and naming rules

You'll need both the original vendor raw files (e.g., Thermo .RAW, AB SCIEX .wiff, Agilent .d) and, if specified, their open-format conversions (mzML 1.1.0) to ensure interoperability and reprocessing in tools like OpenMS, MZmine, or MS-DIAL. The HUPO-PSI mzML format encodes spectra and essential acquisition metadata using controlled vocabularies, which supports downstream reproducibility; see the specification and background in the HUPO-PSI mzML repository and the overview by Martens et al. in Proteomics (2010).

Require the instrument method files used (LC gradient, MS acquisition settings) and a run-order table that maps SampleID ↔ InjectionID ↔ FileName and shows where blanks and pooled QCs appear. Enforce machine-friendly naming rules, for example: SampleID_Group_RunBatch_InjectionID.raw and matching .mzML filenames. This prevents "data not matching" issues when merging or auditing. For practical LC–MS setup context, see our documentation-style overview in LC–MS setup for untargeted metabolomics.
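A naming rule is only useful if it is checked. The sketch below is a minimal Python example that tests each run-order filename against the `SampleID_Group_RunBatch_InjectionID` pattern, confirms the embedded IDs agree with the table, and looks for a matching `.mzML` file. The regular expression assumes underscores appear only as field separators, and `check_naming` is a hypothetical helper name.

```python
import re
from pathlib import PurePath

# Illustrative pattern for SampleID_Group_RunBatch_InjectionID; assumes
# underscores are used only as field separators.
NAME_PATTERN = re.compile(
    r"^(?P<sample_id>[A-Za-z0-9-]+)_(?P<group>[A-Za-z0-9-]+)_"
    r"(?P<run_batch>[A-Za-z0-9-]+)_(?P<injection_id>[A-Za-z0-9-]+)$"
)


def check_naming(run_rows, mzml_names):
    """Return naming problems for run-order rows and delivered mzML files.

    run_rows: dicts with sample_id, injection_id and filename keys.
    mzml_names: filenames of the delivered .mzML files.
    """
    mzml_stems = {PurePath(name).stem for name in mzml_names}
    problems = []
    for row in run_rows:
        filename = row["filename"]
        stem = PurePath(filename).stem  # drops .raw/.wiff/.d
        match = NAME_PATTERN.match(stem)
        if match is None:
            problems.append(f"{filename}: does not follow naming rule")
            continue
        if (match["sample_id"], match["injection_id"]) != (row["sample_id"], row["injection_id"]):
            problems.append(f"{filename}: IDs disagree with run-order table")
        if stem not in mzml_stems:
            problems.append(f"{filename}: no matching .mzML file")
    return problems
```

Comparing stems rather than full names lets one rule cover vendor files and their mzML conversions at once.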

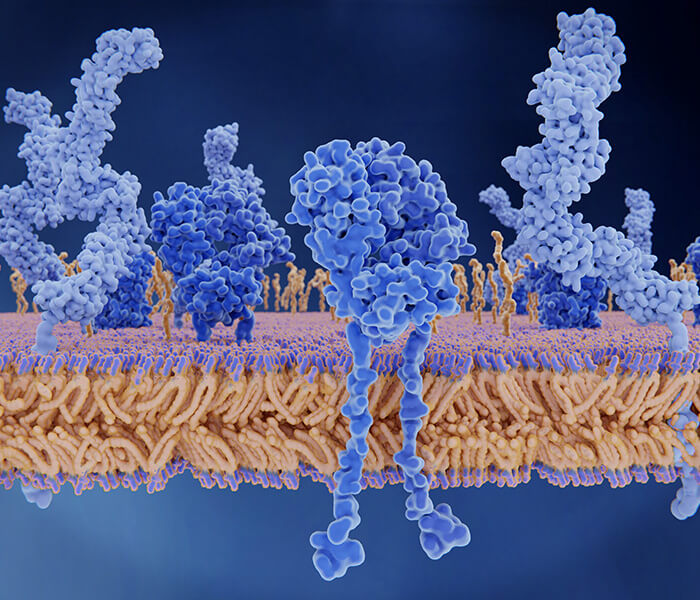

Raw LC–MS data deliverables: vendor raw plus mzML (if agreed) with sample-to-injection traceability.


Feature table deliverables: required columns, flags, and a field dictionary

A reusable feature table combines core identifiers, per-sample quantitation, annotations, and QC/processing flags. At minimum, request: feature_id, m/z (mz), retention time (rt), intensity/area per sample, and sample_id. Recommended additions: adduct, isotope, batch_id, missing_rate, and qc_flag. Insist on a field dictionary (field name, description, required/recommended, data type, and allowed values where relevant). Without it, your downstream bioinformatics team must guess column meanings—leading to errors and delays. For standardized exchange, consider adding an mzTab-M export that links features, per-sample abundances, and MS/MS references; see the GNPS description of feature-based molecular networking with mzTab-M.
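The recommended `missing_rate` column is easy to recompute from the per-sample intensities, which gives you an independent check on the delivered value. This is a minimal sketch assuming a wide table (one row per feature, `intensity_<sample_id>` columns); counting both empty cells and zeros as missing is an assumption to confirm with the vendor, since pipelines differ on how undetected peaks are written.

```python
import csv
import io


def missing_rates(feature_text, prefix="intensity_"):
    """Return {feature_id: fraction of samples with no detected intensity}."""
    reader = csv.DictReader(io.StringIO(feature_text))
    sample_cols = [c for c in reader.fieldnames or [] if c.startswith(prefix)]
    rates = {}
    for row in reader:
        values = [row[c] for c in sample_cols]
        # Assumption: empty cells and zeros both count as missing.
        n_missing = sum(1 for v in values if v in ("", None) or float(v) == 0.0)
        rates[row["feature_id"]] = n_missing / len(sample_cols) if sample_cols else 0.0
    return rates
```

Comparing these values with a delivered `missing_rate` column, within rounding, is a quick consistency check before any filtering rules are re-applied.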

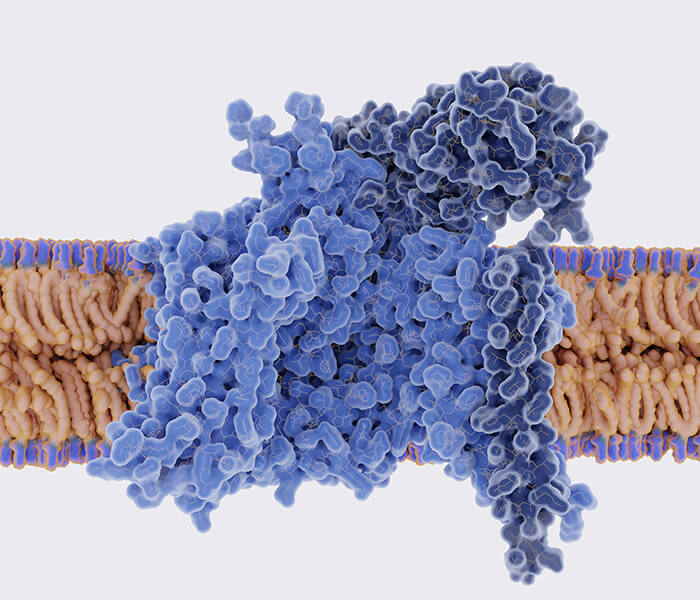

A reusable feature table needs a field dictionary—critical for downstream bioinformatics and audit-ready reporting.


Sample metadata deliverables: minimum fields for traceability and reanalysis

Traceability depends on simple, consistent metadata. Require at least: sample_id, matrix/organism part, group/arm, extraction_batch, run_batch, injection_order, plate_id, method_version, operator, and notes. The assay-level record should link samples to raw file names, instrument, and method descriptors, preserving randomization and run-order context. Aligning with ISA-Tab conventions used by MetaboLights improves FAIRness and auditability; see the MetaboLights guides (2024) and the 2024 database overview by Yurekten et al. in Nucleic Acids Research.
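A quick traceability check confirms that the minimum metadata fields exist, that `sample_id` values are unique, and that every sample in the metadata appears in the run-order table. The sketch assumes both tables are already loaded as lists of dicts (for example with `csv.DictReader`); `check_metadata` is a hypothetical name, and `matrix` stands in for the matrix/organism part field.

```python
from collections import Counter

# Minimum fields from the list above; "matrix" stands in for matrix/organism part.
MIN_FIELDS = [
    "sample_id", "matrix", "group", "extraction_batch", "run_batch",
    "injection_order", "plate_id", "method_version", "operator", "notes",
]


def check_metadata(metadata_rows, run_rows):
    """Return traceability problems between sample metadata and run order."""
    problems = []
    columns = set(metadata_rows[0]) if metadata_rows else set()
    missing = [f for f in MIN_FIELDS if f not in columns]
    if missing:
        problems.append(f"missing metadata fields: {missing}")
    counts = Counter(r.get("sample_id") for r in metadata_rows)
    for sample_id, n in counts.items():
        if n > 1:
            problems.append(f"duplicate sample_id {sample_id} ({n} rows)")
    injected = {r["sample_id"] for r in run_rows}
    for sample_id in counts:
        if sample_id not in injected:
            problems.append(f"sample_id {sample_id} has no injection in run order")
    return problems
```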

Minimum sample metadata fields keep metabolomics deliverables traceable, reproducible, and audit-ready.


Identification deliverables: confidence levels, evidence types, and what's realistic

Identification confidence should be explicit for each reported metabolite. A practical ladder is: Unknown feature → Putative annotation → MS/MS-supported match → Confirmed with a reference standard measured under the same conditions. Report the evidence behind each call: accurate m/z, retention time, MS/MS spectra, spectral library name/version and match score, and whether an authentic standard was run. Community guidance holds that Level 1 (confirmed) identification requires matching multiple orthogonal properties against an authentic standard; see Salek et al.'s overview of MSI reporting (2013) and a 2023 perspective on evidence-based identification in Analytical Chemistry.
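Encoding the ladder as a rule helps keep calls consistent across a report. The sketch below assigns the four-step ladder from the evidence fields listed above. The field names (`mz_match`, `msms_library`, `msms_score`, `standard_matched`) and the decision order are hypothetical illustrations, not a formal MSI implementation; real pipelines also weigh match scores and tolerances before accepting a call.

```python
from dataclasses import dataclass
from typing import Optional

LADDER = (
    "Unknown feature",
    "Putative annotation",
    "MS/MS-supported match",
    "Confirmed with reference standard",
)


@dataclass
class Evidence:
    """Evidence recorded for one reported feature (hypothetical fields)."""
    mz_match: bool               # accurate m/z matches a database entry
    msms_library: Optional[str]  # library name/version of an MS/MS match
    msms_score: Optional[float]  # recorded for the report; not used below
    standard_matched: bool       # m/z, RT and MS/MS matched an authentic
                                 # standard run under the same conditions


def confidence_level(ev: Evidence) -> str:
    """Return the highest ladder step the recorded evidence supports."""
    if ev.standard_matched:
        return LADDER[3]
    if ev.msms_library is not None:
        return LADDER[2]
    if ev.mz_match:
        return LADDER[1]
    return LADDER[0]
```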

Standards alignment: this article follows community reporting conventions: MSI identification confidence levels (Salek et al. 2013, MSI reporting guidance); the HUPO-PSI mzML 1.1.0 specification (HUPO-PSI mzML repository and the Martens et al. overview); ISA-Tab metadata conventions used by MetaboLights (EMBL-EBI MetaboLights guides); and pathway database citation practice, which means citing the Reactome release or the KEGG release date when reporting results (Reactome NAR; KEGG REST). These anchors improve reproducibility and auditability.

Metabolite identification deliverables should include confidence levels and evidence, not just names.


QC report deliverables: what a complete QC pack should include

Avoid prescribing universal numeric thresholds; instead, require the following diagnostics and a short narrative of how they were used. Reviews emphasize thorough diagnostic disclosure and run-order context to interpret stability and batch effects; see Broeckling et al. in Analytical Chemistry (2023).

- Pooled QC drift across run order (intensity or RT drift visualization).

- PCA (or similar) showing whether pooled QCs cluster tightly, which indicates stable acquisition and processing.

- Blank checks summarizing background features and how they were handled.

- Missingness overview per feature and per sample, with handling approach noted.

- Repeatability overview and a rerun/exception log (what was repeated, why, when, and outcomes).
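Two of these diagnostics are simple to recompute from delivered data: per-feature repeatability in pooled QCs, expressed as relative standard deviation (RSD), and a linear trend of QC intensity against injection order as a crude drift indicator. The standard-library sketch below reports values rather than applying a pass/fail threshold, in line with the advice above; the input shape (a list of `(injection_order, intensity)` pairs per feature) is an assumption.

```python
import statistics


def qc_rsd_percent(intensities):
    """Relative standard deviation (%) of one feature across pooled QCs."""
    mean = statistics.mean(intensities)
    return 100.0 * statistics.stdev(intensities) / mean


def drift_slope(qc_points):
    """Least-squares slope of QC intensity versus injection order.

    qc_points: list of (injection_order, intensity) tuples for one feature.
    A slope near zero suggests little linear drift; curved drift needs plots.
    """
    xs = [x for x, _ in qc_points]
    ys = [y for _, y in qc_points]
    x_mean, y_mean = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in qc_points)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return sxy / sxx


def qc_summary(qc_by_feature):
    """Return {feature_id: (rsd_percent, slope)} for each feature."""
    return {
        fid: (qc_rsd_percent([y for _, y in pts]), drift_slope(pts))
        for fid, pts in qc_by_feature.items()
    }
```

These numbers complement, rather than replace, the run-order plots: a slope cannot show step changes between batches.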

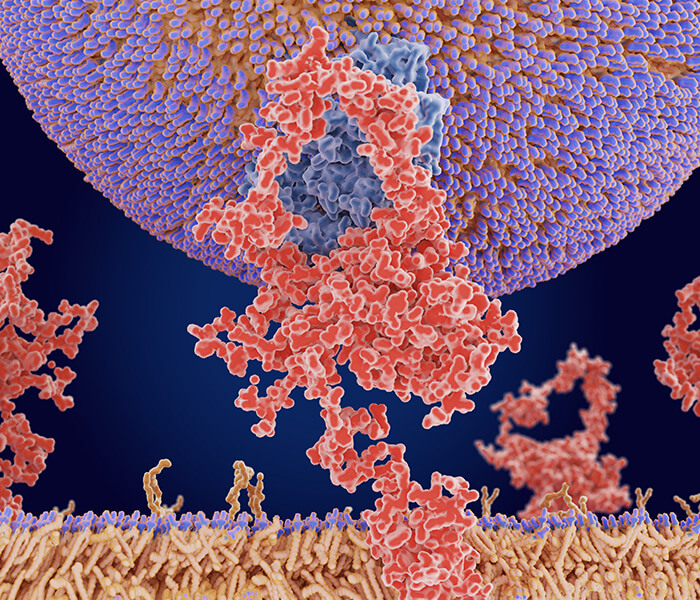

QC report essentials for a metabolomics analysis service: drift diagnostics, QC clustering, blanks, and missingness.


Pathway and network deliverables (scope-dependent): what to ask for and how to reproduce

Pathway or network outputs add value only when IDs map reliably. If included in scope, require a reproducibility packet with: the input feature/ID list, an ID mapping table (which identifier scheme was used), enrichment parameters and background set, the database name and version (e.g., KEGG/Reactome release), and exportable outputs (hit tables, plots, and a network file like GraphML). Reproducible pathway reporting stresses explicit identifier mapping, background sets, parameter disclosure, and database version citation so results can be re-run later; see Wieder et al. in PLOS Computational Biology (2021).
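To show why the background set must be disclosed, here is a minimal over-representation sketch: a one-sided hypergeometric test of how many input metabolites fall in one pathway, given an explicit background (the measurable universe). The IDs and pathway membership in the example are made up, and this is one common enrichment method among several, not necessarily the one a given vendor uses.

```python
from math import comb


def hypergeom_sf(k, N, K, n):
    """P(X >= k) for X ~ Hypergeometric(population N, K successes, n draws)."""
    upper = min(K, n)
    total = comb(N, n)
    return sum(comb(K, i) * comb(N - K, n - i) for i in range(k, upper + 1)) / total


def ora(hits, pathway_members, background):
    """One-sided over-representation test restricted to the background set."""
    background = set(background)
    hits = set(hits) & background            # IDs outside the universe are dropped
    members = set(pathway_members) & background
    overlap = hits & members
    p = hypergeom_sf(len(overlap), len(background), len(members), len(hits))
    return {"overlap": sorted(overlap), "k": len(overlap), "N": len(background),
            "K": len(members), "n": len(hits), "p_value": p}
```

Swapping the background from "all measured features" to a full database compound list changes `N`, and therefore the p-value, which is exactly why the reproducibility packet must state it.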

Pathway outputs are most useful when IDs map well—always deliver mapping tables, parameters, and database versions.


Versioning and reproducibility: analysis manifest, software versions, and databases

An analysis manifest is the simplest way to make results reproducible. It lists the software and OS versions, libraries/databases (HMDB, KEGG, Reactome, ChEBI, LIPID MAPS; spectral libraries like NIST or GNPS), key parameters (peak picking settings, alignment tolerances), normalization method, batch correction approach and settings, filtering rules, date/time, and analyst initials.
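A manifest can be screened for these fields before acceptance. The sketch assumes the YAML has already been parsed into a Python dict (for example with PyYAML's `yaml.safe_load`); the dot-separated key paths in `REQUIRED_KEYS` follow the example manifest in this article and are assumptions to adapt to your own layout.

```python
# Key paths (dot-separated) the manifest is expected to contain; adapt as needed.
REQUIRED_KEYS = [
    "manifest_version",
    "software",
    "databases",
    "parameters.peak_picking",
    "parameters.alignment",
    "normalization.method",
    "batch_correction.method",
    "filtering_rules",
]


def get_path(data, dotted):
    """Follow a dot-separated key path through nested dicts; None if absent."""
    node = data
    for key in dotted.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def manifest_problems(manifest):
    """Return missing key paths and software/database entries lacking versions."""
    problems = [k for k in REQUIRED_KEYS if get_path(manifest, k) in (None, "", [], {})]
    for section in ("software", "databases"):
        for entry in manifest.get(section) or []:
            if not entry.get("version"):
                problems.append(f"{section}: {entry.get('name', '?')} has no version")
    return problems
```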

An analysis manifest makes your metabolomic profiling service deliverables reproducible and audit-ready.


Example practice (disclosure: the author works at Creative Proteomics): in the manifest examples we provide to clients, the sheet lists software names and versions for each step, database releases and dates, normalization and batch-correction settings, and explicit filtering rules. The goal is transparency, so reprocessing teams can reproduce results without guesswork.

Example analysis manifest (YAML)

```yaml
manifest_version: "1.0"
generated_on: "2025-12-01T15:32:00Z"  # ISO 8601 timestamp for audit trail
analyst: "A. Scientist"  # person responsible for run
software:
  - name: MSConvert
    version: "3.0.22000"
    step: "vendor-to-mzML conversion"
  - name: MZmine
    version: "2.53"
    step: "peak-picking and alignment"
databases:
  - name: HMDB
    version: "4.0"
  - name: GNPS_MSP
    version: "2024-11-01"
parameters:
  peak_picking:
    mz_tolerance_ppm: 10
    rt_tolerance_sec: 30
  alignment:
    gap_extend_rt_sec: 5
normalization:
  method: "probabilistic_quotient"
filtering_rules:
  min_peak_intensity: 1000  # absolute intensity units
  min_samples_present: 0.2  # fraction of samples where feature must appear
batch_correction:
  method: "sva"
  settings:
    num_sv: 2
checksums:
  raw_index: "checksums/raw_files.sha256"  # path to checksum file for raw data
review:
  approved_by: "Q. Reviewer"
  approved_on: "2025-12-03"
```

Why this helps (validation checkpoints and reproducibility)

- Key validation checkpoints: confirm checksums match raw files, verify software versions and parameter keys, and ensure sample IDs in feature tables match the run-order table.

- Reproducibility value: a machine-readable manifest records the exact environment and rules needed to re-run preprocessing and annotation steps without guesswork—critical for audits, merges, and downstream reanalysis.
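The first checkpoint (checksums match raw files) can be scripted with the standard library. The sketch assumes the checksum index uses the common `sha256sum` text layout (`<hex digest>  <relative path>` per line), as the `raw_files.sha256` name in the example manifest suggests; vendor `.d` folders are directories, so they would need one entry per contained file.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large raw files are never fully loaded."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksums(index_file, root):
    """Compare files under root with a sha256sum-style index.

    Returns (mismatched, missing) lists of relative paths.
    """
    root = Path(root)
    mismatched, missing = [], []
    for line in Path(index_file).read_text().splitlines():
        if not line.strip():
            continue
        expected, rel_path = line.split(maxsplit=1)
        rel_path = rel_path.lstrip("*")  # sha256sum marks binary mode with '*'
        target = root / rel_path
        if not target.is_file():
            missing.append(rel_path)
        elif sha256_of(target) != expected.lower():
            mismatched.append(rel_path)
    return mismatched, missing
```

Running this on both sides of a transfer (vendor before upload, you after download) turns the checksum file into an acceptance test rather than a formality.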

Audit-ready acceptance criteria you can paste into your SOW

Use pass/fail language tied to completeness, traceability, and reproducibility:

- Completeness: vendor raw files and (if agreed) mzML are delivered; instrument method files and a run-order table mapping SampleID ↔ InjectionID ↔ FileName are present; and the feature table CSV with field dictionary, sample metadata (minimum fields), identification summary with evidence references, QC report with the required diagnostics, analysis manifest with versions and parameters, and pathway reproducibility packet (if in scope) are all included.

- Traceability: consistent naming links each sample to its injection and raw/mzML filenames; sample_id values in the feature table match the sample metadata; identification reports cite library names and versions; pathway mappings and outputs cite database names and versions; and QC plots include run-order context.

- Reproducibility: the manifest records software, OS, and database versions; parameters and filtering rules are documented; pathway analysis states its background set and parameter values; and mzML conversions have been validated against the vendor raw files.

Common deliverable gaps (and how to prevent them before kickoff)

The gaps we see most often are missing field dictionaries, incomplete sample metadata, unlinked injections (no sequence table), undocumented library or database versions, QC plots without run-order context, and pathway outputs without mapping tables or parameter disclosure. Prevent them before kickoff by:

- assigning SampleID ownership and naming conventions;

- requiring mzML alongside vendor raw files;

- agreeing on acceptable identification confidence levels and the evidence files to deliver;

- specifying QC diagnostics plus a rerun/exception log;

- mandating a pathway reproducibility packet when pathway analysis is in scope;

- storing instrument method files with a run-order table that maps SampleID to InjectionID and FileName;

- documenting normalization and batch correction methods, with parameter values, in the manifest;

- using secure transfer methods (e.g., SFTP/Aspera) with appropriate handling of sensitive metadata.

Request a sample deliverables pack (and compare it to your checklist)

Before you issue a PO, request a sample deliverables pack and compare it line-by-line against your checklist. This single step catches gaps early and aligns expectations about files, fields, and evidence.

- Minimal: vendor raw + mzML, run-order/sequence table linking SampleID→InjectionID→FileName, feature table field dictionary, concise QC summary, and analysis manifest.

- Standard: everything in Minimal plus identification evidence (MS/MS spectra, library name/version, match scores), a complete QC pack (plots, rerun log), and full parameter/exports package.

- Publication-ready: everything in Standard plus signed version log/checksums, ID mapping tables, and a reproducibility report (commands, DB versions, workflows).

To request a sample deliverables pack, see our Metabolomics Services page for context and examples.

About the authors

Caimei Li, Senior Scientist at Creative Proteomics. See her LinkedIn profile (Caimei Li on LinkedIn) for background and contact details.