A design-to-validation blueprint: platform, statistics, and reproducible interpretation for research use only.

Metabolomics can turn noisy signals into decision-grade evidence, but only when the study is designed for auditability from day one. In this metabolomics biomarker case study, we use an oncology example to show how a metabolomics analysis service and a disciplined untargeted metabolomics workflow can move from discovery to an independent validation cohort, adding targeted confirmation and a reproducible analysis that holds up under scrutiny.

Key takeaways

- The fastest path from discovery to clinical relevance is an evidence chain: untargeted profiling → prioritized candidates → independent validation cohort → targeted LC–MS/MS confirmation.

- Study design prevents "false biomarkers" before statistics: consistent sampling windows, matched confounders, and an explicit batch/site plan.

- Trustworthy LC–MS data depends on documented QC: pooled QCs, blanks, drift monitoring, carryover checks, and traceable metadata with acceptance thresholds.

- Statistics must avoid leakage and overfitting: handle missingness by mechanism, correct batch effects within CV folds, and control FDR.

- Validation design outranks any single ROC curve: replication in an independent cohort and, where possible, targeted quantification of key metabolites.

Executive snapshot: metabolomics biomarker case study at a glance

Question: Can plasma metabolites support early risk stratification in neuroblastoma at diagnosis?

Cohorts: Untargeted LC–HRMS discovery cohort; independent validation cohort with targeted LC–MS/MS quantification of the lead metabolite.

Platform and analytics: LC–HRMS for discovery; candidate selection via standard univariate and multivariate screening; survival analysis for clinical signal.

Validation: Independent cohort confirmation and targeted quantification of 3‑O‑methyldopa (3‑O‑MD), with concentration ranges reported and the association with poorer prognosis replicated in high-risk patients.

Why it mattered: The study demonstrated a defensible, auditable route from untargeted discovery to decision-relevant evidence for risk stratification at diagnosis.

Source: Frontiers in Oncology (2022), Untargeted LC‑HRMS plasma metabolomics revealing 3‑O‑methyldopa as a prognostic signal in high‑risk neuroblastoma, DOI 10.3389/fonc.2022.845936. The full text is open access in Frontiers in Oncology and on PMC.

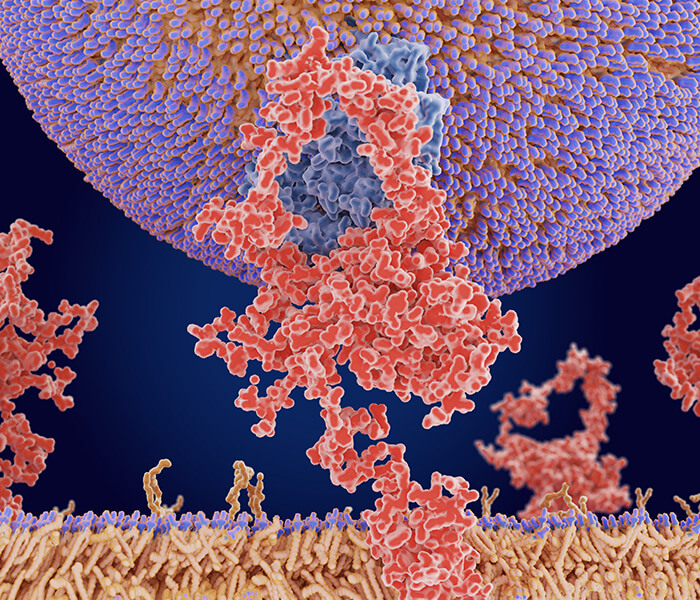

Workflow blueprint for biomarker discovery using a metabolomics analysis service approach (illustrative schematic).

The translational question and decision at stake

Reframed for practice: At the time of diagnosis, could a plasma biomarker help stratify neuroblastoma patients into risk categories that inform prognostic assessment and downstream trial design? The minimum viable output was a candidate with independent cohort replication and a targeted LC–MS/MS method reporting a usable concentration range. For actual deployment, teams would further define threshold logic, analytic sensitivity, and documentation aligned with research-use-only decision support.

Study design that prevented false biomarkers

An honest biomarker pipeline begins with cohort logic. The anchor study framed inclusion around newly diagnosed neuroblastoma patients with clear clinical staging and age stratification, aiming to avoid heterogeneity that blurs signals. Matching and confounder control focused on variables like age at diagnosis and metastatic status, with sample collection windows standardized to minimize pre-analytical shifts. Critically, the design recognized batch and site structure. Samples were balanced across runs, and the analysis plan anticipated batch diagnostics and correction rather than retrofitting fixes after the fact. By constraining variability upstream, the study reduced the chance that a "biomarker" was a batch artifact or a demographic proxy.
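To make "balanced across runs" concrete, here is a minimal Python sketch of a stratified run plan. It assumes each sample carries a single stratum label (outcome class, or class crossed with a matched confounder), deals each stratum round-robin across batches, randomizes injection order within each batch with a fixed seed, and brackets study samples with pooled QCs and blanks. The function name, QC interval, and number of conditioning injections are illustrative choices, not the anchor study's documented run plan.

```python
import random
from collections import defaultdict


def plan_runs(samples, n_batches, qc_every=5, n_conditioning_qc=3, seed=42):
    """Stratified batch assignment plus a randomized run order with QCs and blanks.

    samples: list of (sample_id, stratum) tuples, where a stratum could be the
    outcome class or a class x confounder combination (hypothetical inputs).
    Returns {batch_index: [injection labels in run order]}.
    """
    rng = random.Random(seed)  # fixed seed keeps the plan reproducible
    strata = defaultdict(list)
    for sample_id, stratum in samples:
        strata[stratum].append(sample_id)

    # Deal each stratum round-robin across batches so every batch receives a
    # near-equal share (within one sample) of each stratum.
    batches = defaultdict(list)
    slot = 0
    for stratum in sorted(strata):
        members = strata[stratum][:]
        rng.shuffle(members)
        for sample_id in members:
            batches[slot % n_batches].append(sample_id)
            slot += 1

    plan = {}
    for b in range(n_batches):
        order = batches[b][:]
        rng.shuffle(order)  # randomize injection order within the batch
        run = ["BLANK"] + ["QC"] * n_conditioning_qc  # blank, then conditioning QCs
        for i, sample_id in enumerate(order, start=1):
            run.append(sample_id)
            if i % qc_every == 0:
                run.append("QC")  # pooled QC after every qc_every study samples
        if run[-1] != "QC":
            run.append("QC")  # always bracket the last samples with a QC
        run.append("BLANK")  # closing blank to check carryover
        plan[b] = run
    return plan
```

Because the seed is fixed, the same inputs always produce the same plan, so the run-order map can be regenerated and audited rather than reconstructed after the fact.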

Platform and QC that made measurements trustworthy

In untargeted LC–MS, trust is earned through transparent QC. Community guidance emphasizes pooled QC samples to condition the system and monitor precision, process/extraction blanks to flag contamination and carryover, and drift monitoring across the run with documented acceptance criteria. Pooled‑QC‑based normalization approaches and RSD filtering help retain stable features; drift plots and QC PCA provide line-of-sight into performance. Reporting discipline matters: consistent disclosure of QC frequency, thresholds, and decisions improves comparability and audit readiness.

- Pooled QC programs and precision assessment are synthesized in the Broeckling et al. 2023 scoping review.

- Normalization and drift control practices are summarized in Dunn et al. 2023 workshop guidance.

- The mQACC minimal reporting recommendations (Mosley et al. 2024) consolidate contamination controls and documentation requirements.
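The QC logic above can be sketched per feature under simplifying assumptions: drift is modeled by linear interpolation between pooled-QC injections (a lightweight stand-in for the LOESS or spline fits that QC-based correction methods typically use), corrected values are rescaled to the QC median, and a feature is kept only if its pooled-QC RSD is at most 30% and its mean blank signal is at most 20% of its mean QC signal. Both thresholds are placeholders for your own documented acceptance criteria, and intensities are assumed to be positive.

```python
from bisect import bisect_left
from statistics import mean, median, stdev


def interpolate(x, xs, ys):
    """Linear interpolation of y at x from sorted points (xs, ys); flat beyond the ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    j = bisect_left(xs, x)
    if xs[j] == x:
        return ys[j]
    x0, x1, y0, y1 = xs[j - 1], xs[j], ys[j - 1], ys[j]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def drift_correct(order, values, is_qc):
    """Divide each injection by the QC trend at its run position, rescaled to the QC median."""
    qc = sorted((o, v) for o, v, q in zip(order, values, is_qc) if q)
    xs = [o for o, _ in qc]
    ys = [v for _, v in qc]
    target = median(ys)
    return [v * target / interpolate(o, xs, ys) for o, v in zip(order, values)]


def rsd(values):
    """Relative standard deviation in percent."""
    return 100.0 * stdev(values) / mean(values)


def keep_feature(qc_values, blank_values, max_rsd=30.0, max_blank_ratio=0.2):
    """Keep a feature if pooled-QC precision is acceptable and blank signal is small."""
    blank_level = mean(blank_values) if blank_values else 0.0
    return rsd(qc_values) <= max_rsd and blank_level <= max_blank_ratio * mean(qc_values)
```

Logging the RSD and blank ratio of every feature, not just the pass/fail flag, is what lets a reviewer reproduce the filtering decision later.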

Statistics beyond significance and how candidates were prioritized

The analytics pipeline matters as much as the instrument. Preprocessing starts with feature filtering on coverage and quality, explicit missingness rules that distinguish abundance-driven missingness (missing not at random, MNAR) from technical non-detects, and conservative imputation fitted only within training folds. Batch effects are diagnosed with QC-informed visualization and corrected with methods that sit inside the model's cross-validation loop, which prevents leakage. Univariate screening with FDR control is triangulated against interpretable multivariate models. Hyperparameters are tuned in nested cross-validation, and all transformations, filters, and selections are logged for reproducibility.
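A minimal sketch of fold-contained preprocessing, assuming a samples-by-features matrix of positive intensities with None for missing values and one batch label per sample. Half-minimum imputation, log2 transformation, per-batch median centering (a deliberately simple substitute for QC-based or ComBat-style correction), and z-scoring are all fitted on the training rows of each fold and only applied to the held-out rows. `fit_and_score` is a hypothetical user-supplied callback; any feature selection or tuning inside it must likewise see only the training data. Features with no observed training values should be filtered out beforehand.

```python
import math
import random
from statistics import mean, median, pstdev


def _pick(seq, ids):
    return [seq[i] for i in ids]


def _log_impute(X, half_min):
    # Replace missing values (None) with the training half-minimum, then log2.
    return [[math.log2(v if v is not None else half_min[j]) for j, v in enumerate(row)]
            for row in X]


def _center(logged, batches, p):
    # Swap each batch median for the overall training median; batches never seen
    # in training fall back to the overall median (i.e. no batch shift).
    out = []
    for row, b in zip(logged, batches):
        ref = p["batch_med"].get(b, p["overall"])
        out.append([v - ref[j] + p["overall"][j] for j, v in enumerate(row)])
    return out


def fit_preprocessor(X, batches):
    """Learn imputation, batch-centering and scaling parameters from TRAINING rows only."""
    n_feat = len(X[0])
    p = {"half_min": [min(r[j] for r in X if r[j] is not None) / 2 for j in range(n_feat)]}
    logged = _log_impute(X, p["half_min"])
    p["overall"] = [median([r[j] for r in logged]) for j in range(n_feat)]
    p["batch_med"] = {}
    for b in set(batches):
        rows = [r for r, rb in zip(logged, batches) if rb == b]
        p["batch_med"][b] = [median([r[j] for r in rows]) for j in range(n_feat)]
    centered = _center(logged, batches, p)
    p["mu"] = [mean([r[j] for r in centered]) for j in range(n_feat)]
    p["sd"] = [pstdev([r[j] for r in centered]) or 1.0 for j in range(n_feat)]
    return p


def transform(X, batches, p):
    """Apply previously fitted parameters to any rows, including held-out ones."""
    centered = _center(_log_impute(X, p["half_min"]), batches, p)
    return [[(v - p["mu"][j]) / p["sd"][j] for j, v in enumerate(row)] for row in centered]


def cross_validate(X, batches, y, k, fit_and_score, seed=0):
    """K-fold CV in which every preprocessing step is refit inside each training fold."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    scores = []
    for fold in range(k):
        test_idx = idx[fold::k]
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        p = fit_preprocessor(_pick(X, train_idx), _pick(batches, train_idx))
        X_tr = transform(_pick(X, train_idx), _pick(batches, train_idx), p)
        X_te = transform(_pick(X, test_idx), _pick(batches, test_idx), p)
        # fit_and_score is user-supplied: feature selection, tuning and model
        # fitting inside it must also use only (X_tr, y_tr).
        scores.append(fit_and_score(X_tr, _pick(y, train_idx), X_te, _pick(y, test_idx)))
    return scores
```

For nested cross-validation, the same pattern is repeated inside each training fold to tune hyperparameters before the outer held-out fold is scored.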

Two non-negotiables guide defensible modeling:

- Leakage prevention: Every step—normalization, batch correction, imputation, and feature selection—must be fit only on the training partition for each fold. Evaluate on untouched validation partitions. For guardrails, see the comprehensive Nature Protocols workflow by Alseekh et al., 2021.

- Multiple testing control: Use Benjamini–Hochberg FDR for discovery-scale inference; escalate to more stringent corrections when the intended use demands it. Practical preprocessing and missingness principles are consolidated in Chen et al., 2022.
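Benjamini–Hochberg adjustment is short enough to write out, which also makes it easy to log exactly how q-values were produced. This sketch returns adjusted p-values (q-values) in the input order using the standard step-up procedure; the 0.05 default in the hypothetical helper `significant` is an assumption, not a recommendation for any particular study.

```python
def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values (q-values), returned in input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0  # caps q-values at 1 and enforces monotonicity
    for rank in range(m, 0, -1):  # step up from the largest p-value
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def significant(pvalues, alpha=0.05):
    """Flag features whose BH q-value is at or below alpha."""
    return [q <= alpha for q in benjamini_hochberg(pvalues)]
```

Under the leakage rule above, this adjustment belongs inside each training fold whenever univariate screening is used to select features for a model.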

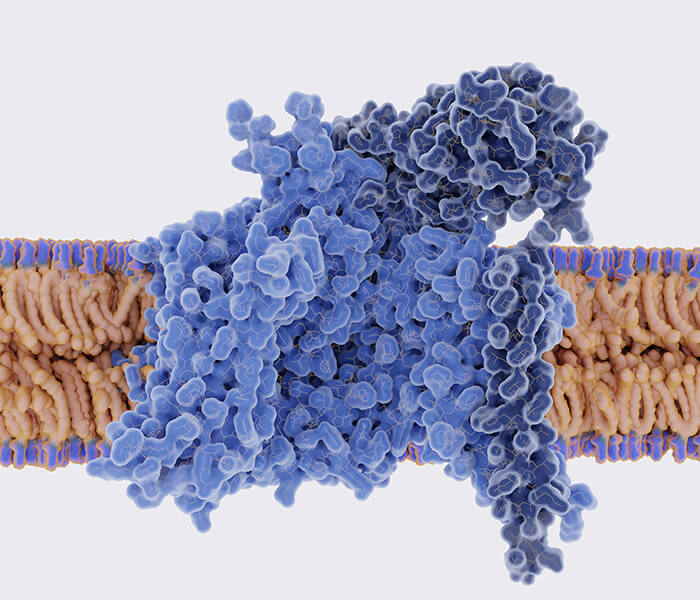

In biomarker discovery, validation evidence matters more than a single headline metric (illustrative schematic).

Validation strategy and what counted as real

Validation is layered by design. Technical stability comes first, supported by QC diagnostics and precision metrics. Internal resampling checks (bootstrap and permutation) test the robustness of both the signal and the model. Most importantly, an independent validation cohort replicates the association. Where feasible, targeted LC–MS/MS converts a putative finding into a concentration with an authentic standard.
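The internal resampling checks can be sketched with two standard tools: a one-sided label-permutation test of the observed ROC AUC (is the signal better than chance?) and a percentile bootstrap interval over subjects (how stable is the estimate?). AUC is computed with the Mann–Whitney formulation, labels are assumed to be coded 0/1, and the resample counts and seeds are illustrative. These checks complement, and do not replace, the independent cohort.

```python
import random


def auc(scores, labels):
    """ROC AUC via the Mann-Whitney statistic; ties count as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0 for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))


def permutation_pvalue(scores, labels, n_perm=2000, seed=1):
    """Observed AUC and the add-one permutation p-value for AUC >= observed."""
    rng = random.Random(seed)
    observed = auc(scores, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break any score-label association
        if auc(scores, shuffled) >= observed:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)


def bootstrap_ci(scores, labels, n_boot=2000, level=0.95, seed=2):
    """Percentile bootstrap interval for AUC, resampling subjects with replacement."""
    rng = random.Random(seed)
    n = len(scores)
    stats = []
    while len(stats) < n_boot:
        ids = [rng.randrange(n) for _ in range(n)]
        ys = [labels[i] for i in ids]
        if 0 < sum(ys) < n:  # skip resamples that lack one of the two classes
            stats.append(auc([scores[i] for i in ids], ys))
    stats.sort()
    lo = stats[int((1 - level) / 2 * n_boot)]
    hi = stats[min(n_boot - 1, int((1 + level) / 2 * n_boot))]
    return lo, hi
```

If the scores come from a fitted model, the permutation must re-run the whole fold-contained pipeline for each shuffle; permuting only the final scores tests the metric, not the modeling procedure.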

In the anchor neuroblastoma case, the lead metabolite 3‑O‑methyldopa was quantified by targeted LC–MS/MS in an independent cohort, with concentrations spanning tens to thousands of ng/mL and higher levels associated with poorer prognosis among high‑risk patients. This structure—discovery, independent validation, and targeted confirmation with clinical stratification—made the evidence chain audit-friendly and decision-relevant without over-reliance on a single ROC curve. For open-access details on the discovery-to-validation design and targeted confirmation, see Frontiers in Oncology (2022), DOI 10.3389/fonc.2022.845936.

Biological interpretation with guardrails

Interpretation should elevate mechanism without outrunning the data. Here, 3‑O‑methyldopa sits within catecholamine metabolism, a pathway already relevant to neuroblastoma biology. That context helps explain the observed associations while keeping claims disciplined: many untargeted features remain putative, some remain unmapped, and isomeric ambiguity is common without authentic standards. Teams should report MSI identification levels per feature, library and database versions, and mapping coverage so downstream readers can judge confidence and transferability. For consensus on identification confidence and reporting, see the Metabolomics Standards Initiative guidance in Nature Protocols and complementary community documents such as Chen et al., 2022.

Pathway analysis in metabolomics is strongest when mapping coverage, assumptions, and database versions are reported (illustrative schematic).
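As a sketch of coverage-aware pathway analysis, the function below runs a hypergeometric over-representation test restricted to identifiers that map to at least one pathway, and reports hit and background mapping coverage alongside each p-value. The choice of background (here, measured features that map to a pathway) is an assumption that strongly affects results and should be stated; the `pathways` dictionary stands in for a specific, versioned database release.

```python
from math import comb


def hypergeom_sf(k, N, K, n):
    """P(X >= k) for X ~ Hypergeometric(population N, K successes, n draws)."""
    return sum(comb(K, i) * comb(N - K, n - i) for i in range(k, min(K, n) + 1)) / comb(N, n)


def pathway_ora(hits, background, pathways):
    """Over-representation test restricted to mapped IDs, reporting mapping coverage.

    hits: set of candidate IDs (a subset of background); background: set of all
    measured IDs; pathways: dict name -> set of member IDs from a versioned database.
    """
    mapped_universe = {m for members in pathways.values() for m in members} & background
    mapped_hits = hits & mapped_universe
    report = {
        "hit_coverage": len(mapped_hits) / len(hits) if hits else 0.0,
        "background_coverage": len(mapped_universe) / len(background) if background else 0.0,
        "pathways": {},
    }
    N, n = len(mapped_universe), len(mapped_hits)
    for name, members in pathways.items():
        in_universe = members & mapped_universe
        k = len(in_universe & mapped_hits)
        report["pathways"][name] = {
            "overlap": k,
            "size": len(in_universe),
            "p": hypergeom_sf(k, N, len(in_universe), n),
        }
    return report
```

Reporting `hit_coverage` next to each p-value makes it obvious when a "significant" pathway rests on a small, mappable fraction of the candidates.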

What analysis output examples should look like

Before choosing a partner, ask to review vendor-neutral outputs that you can drop straight into your R&D workflow. Minimum outputs include a candidate table with identification level and MS/MS evidence fields, a QC diagnostics summary showing drift and missingness decisions with rationales, and a mapping table that records database and library versions used for annotation. Recommended outputs include a concise model report that documents sensitivity analyses and assumptions, plus a pathway summary that states mapping coverage and limitations. Scope-dependent extras may include network modules with stability checks, multi-omics joins for matched samples, and a targeted verification plan with assay-level details.
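One way to keep the candidate table schema explicit is to define it in code, so that every export carries the same fields and obviously invalid rows are rejected early. The field names below are hypothetical, MSI identification levels are encoded as 1 to 4, and the rule that a level 1 row must reference its evidence is an illustrative validation rule rather than a community requirement.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class CandidateRow:
    feature_id: str        # internal feature identifier (hypothetical scheme)
    mz: float
    rt_min: float
    adduct: str
    annotation: str
    msi_level: int         # MSI identification level, 1 (identified) to 4 (unknown)
    msms_evidence: str     # e.g. library match score or spectrum file reference
    library_version: str   # database/library release used for the annotation
    fold_change: float
    q_value: float

    def __post_init__(self):
        if self.msi_level not in (1, 2, 3, 4):
            raise ValueError(f"msi_level must be 1-4, got {self.msi_level}")
        if self.msi_level == 1 and not self.msms_evidence:
            raise ValueError("an MSI level 1 row must reference its supporting evidence")


def to_csv(rows):
    """Serialize candidate rows with a fixed header order taken from the schema."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(CandidateRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buf.getvalue()
```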

Specialist metabolomics providers can support untargeted discovery and targeted confirmation in oncology while delivering audit-ready exports that match the outputs described above. For a sense of the untargeted discovery flow, see this neutral overview of an untargeted metabolomics service.

Reproducibility checklist to keep the study auditable

The simplest way to prevent rework is to ship an explicit reproducibility pack with every study. Use this checklist as your baseline and expand as needed by scope.

- Parameter log with every transformation, threshold, and model setting

- Software versions for acquisition and analysis, including OS and package hashes

- Database and library versions used for identification and mapping

- Mapping tables linking raw features to IDs, adducts, and evidence scores

- Sample-to-file traceability with a complete run-order map

- QC diagnostics summary including drift plots and acceptance thresholds

- Random seeds for resampling procedures, if applicable

- A short readme that explains folder structure and how to rerun key steps
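Several checklist items can be captured automatically. This sketch assembles a JSON manifest with the Python and OS versions, installed versions of named Python packages, the random seed, the parameter log, database versions, the run-order map, and a SHA-256 hash of each data file. The layout and key names are assumptions, and acquisition-software versions still need to be added from the instrument side's own records.

```python
import hashlib
import json
import platform
import sys
from datetime import datetime, timezone
from importlib import metadata
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large raw files never load at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def package_versions(names):
    """Installed versions of the named Python packages (analysis side only)."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = "not installed"
    return versions


def build_manifest(params, seed, run_order, data_files, db_versions, packages=()):
    """Bundle checklist items into one JSON-serializable record (hypothetical layout)."""
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "python": sys.version.split()[0],
        "os": platform.platform(),
        "packages": package_versions(packages),
        "random_seed": seed,
        "parameters": params,
        "database_versions": db_versions,
        "run_order": run_order,  # e.g. [{"injection": 1, "sample_id": ..., "file": ...}]
        "file_sha256": {str(p): sha256_of(p) for p in data_files},
    }


def write_manifest(manifest, out_path):
    Path(out_path).write_text(json.dumps(manifest, indent=2, sort_keys=True))
```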

A reproducibility pack makes biomarker discovery results auditable and easier to reuse for research use only.

How to apply this blueprint to oncology projects

Pilot phase: Stress-test pre-analytics on a small, representative set. Confirm that pooled QCs stabilize the system, blanks are clean, and drift is within acceptance thresholds. Dry-run the candidate table schema and mapping tables so nothing is "to be decided" after acquisition.

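Discovery phase: Balance cohorts across batches and lock your leakage-proof analysis plan. Declare missingness rules and batch correction methods before looking at outcomes. Report MSI identification levels as a separate field in outputs and carry them into modeling, so putative annotations are never presented with the confidence of confirmed identities.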
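Declaring missingness rules before unblinding can be as simple as a fixed decision function per feature. The sketch below encodes one hypothetical rule set: because a pooled QC is a mixture of all study samples, a feature detected reliably in pooled QCs but absent from some study samples is treated as plausibly left-censored (MNAR) and imputed within training folds, whereas a feature that drops out erratically in QCs is treated as a technical problem and excluded. The 80% and 50% thresholds are placeholders.

```python
def classify_missingness(qc_values, study_values, qc_min_detect=0.8, max_study_missing=0.5):
    """Apply a pre-declared (hypothetical) missingness rule to one feature.

    None marks a non-detect. Returns one of:
      "exclude_technical" - erratic in pooled QCs, so missingness looks technical
      "complete"          - no missing study values
      "exclude_sparse"    - missing in too many study samples to impute credibly
      "impute_mnar"       - stable in QCs, so gaps are treated as left-censored
    """
    qc_rate = sum(v is not None for v in qc_values) / len(qc_values)
    study_missing = sum(v is None for v in study_values) / len(study_values)
    if qc_rate < qc_min_detect:
        return "exclude_technical"
    if study_missing == 0:
        return "complete"
    if study_missing > max_study_missing:
        return "exclude_sparse"
    return "impute_mnar"
```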

Validation phase: Design the independent cohort early, not after discovery. Pre-register the targeted LC–MS/MS assay for the top hits, specifying authentic standards, the calibrated concentration range, and the limit of quantification (LoQ). Define how replication will be judged and what constitutes success.
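The quantitative side of a targeted assay can be sketched as a weighted least-squares calibration line, back-calculation of unknowns, a residual-based LoQ estimate (10 times the residual standard deviation divided by the slope, an ICH-style approach), and a check that back-calculated calibrators fall within ±15% of nominal (±20% at the lowest level), a common bioanalytical convention. The weighting scheme, tolerances, and function names are assumptions to be replaced by the validated method's own criteria.

```python
def fit_calibration(conc, response, weight_power=0):
    """Weighted least-squares line: response = slope * conc + intercept.

    weight_power=0 -> unweighted; 1 -> 1/x; 2 -> 1/x^2 (needs conc > 0 when weighted).
    """
    w = [1.0 / c ** weight_power for c in conc]
    sw = sum(w)
    xm = sum(wi * x for wi, x in zip(w, conc)) / sw
    ym = sum(wi * y for wi, y in zip(w, response)) / sw
    sxy = sum(wi * (x - xm) * (y - ym) for wi, x, y in zip(w, conc, response))
    sxx = sum(wi * (x - xm) ** 2 for wi, x in zip(w, conc))
    slope = sxy / sxx
    return slope, ym - slope * xm


def back_calculate(response, slope, intercept):
    """Concentration implied by a measured response."""
    return (response - intercept) / slope


def loq_from_residuals(conc, response, slope, intercept):
    """ICH-style LoQ estimate: 10 * residual SD / slope (unweighted residuals)."""
    resid = [y - (slope * x + intercept) for x, y in zip(conc, response)]
    sd = (sum(r * r for r in resid) / (len(resid) - 2)) ** 0.5
    return 10 * sd / slope


def calibrators_within_tolerance(conc, response, slope, intercept, tol=0.15, lloq_tol=0.20):
    """Relative bias of each back-calculated calibrator against its nominal value."""
    lowest = min(conc)
    ok = []
    for x, y in zip(conc, response):
        bias = abs(back_calculate(y, slope, intercept) - x) / x
        ok.append(bias <= (lloq_tol if x == lowest else tol))
    return ok
```

With 1/x or 1/x² weighting, calibrator concentrations must be strictly positive; a zero-concentration blank is assessed separately rather than included in the fit.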

Procurement and RFP questions you can ask today:

- How will you demonstrate that batch correction, imputation, and feature selection are contained within each cross-validation fold?

- What pooled QC frequency and acceptance thresholds will you use, and how will you document precision and drift?

- Which identification levels (MSI) will be reported per feature, and how will MS/MS evidence be shared?

- What is your independent validation plan and targeted quantification strategy for shortlisted candidates?

- How do you provide sample-to-file traceability and a complete parameter log with software versions?

- What are your missingness rules, and how do you distinguish MNAR from technical non-detects?

- Which databases and versions will be used for mapping, and how will updates be documented?

- Can you provide a de-identified example of the candidate table, QC diagnostics summary, and model report?

For oncology projects using plasma or serum, you may also want to review sample-type nuances outlined in this overview of a serum metabolomics analysis service.

Request analysis output examples

Before you commit, align expectations by reviewing the exact outputs you will receive—candidate tables with identification levels, QC diagnostics with decisions and thresholds, mapping tables with versioning, and a compact model report.

By Caimei Li, Senior Scientist at Creative Proteomics

LinkedIn: https://www.linkedin.com/in/caimei-li-42843b88/

We translate research-use-only metabolomics studies into practical, audit-friendly workflows—covering study design, QC, statistics, validation logic, and interpretation-ready outputs for biomarker discovery teams. Last updated: January 2026

Further reading