Developments in Proteomics Technology

Mass Spectrometry-based Proteomics

Proteomics is a dynamic and pivotal field of the life sciences that employs a diverse array of analytical techniques, broadly divided into low- and high-throughput methods. Over the past few decades, mass spectrometry (MS) has emerged as the "gold standard" for high-throughput proteomic analysis.

Historically, a critical challenge for MS-based proteomics was balancing instrument sensitivity against specificity. In recent years, however, the mass spectrometry landscape has changed markedly, as instrument suppliers have introduced technologies with unprecedented speed, sensitivity, and specificity.

Dr. Harvey Johnston, a postdoctoral research scientist in the Rahul Samant group at the Babraham Institute, University of Cambridge, notes the remarkable gains in mass spectrometer sensitivity. Advances in liquid chromatography coupled with mass spectrometry (LC-MS) have further extended the capabilities of proteomic workflows. Where experiments were once limited to describing a few hundred of the most abundant proteins, researchers can now identify thousands of proteins in a relatively fast and efficient experiment.

At the forefront of this shift is Professor Matthias Mann, a renowned scientist who is research director of the Proteomics Program at the Novo Nordisk Foundation Center for Protein Research and a director at the Max Planck Institute of Biochemistry in Munich. Mann singles out one breakthrough in MS proteomics, led by the Aebersold lab: the move towards data-independent acquisition (DIA).

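DIA, of which Sequential Window Acquisition of All Theoretical Mass Spectra (SWATH-MS) is a well-known implementation, is a significant advance over its counterpart, data-dependent acquisition (DDA). In DDA, the instrument selects only the most intense precursor ions from each MS1 survey scan for fragmentation, so low-abundance peptides can be missed and the selection varies from run to run. DIA instead fragments all precursor ions within successive m/z isolation windows during MS2, providing unbiased analysis, broader proteome coverage, and better reproducibility.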
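The difference in how the two modes choose precursors for fragmentation can be illustrated with a toy Python sketch. It is not instrument-control code: the `Precursor` type, the top-N value, the fixed 25 m/z window width and all ion values are hypothetical, chosen only to show that DDA's intensity ranking can drop low-abundance ions that DIA's window tiling keeps.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Precursor:
    mz: float         # mass-to-charge ratio seen in the MS1 survey scan
    intensity: float  # MS1 signal intensity


def dda_select(precursors, top_n):
    """DDA: fragment only the top_n most intense precursors from the MS1 scan."""
    ranked = sorted(precursors, key=lambda p: p.intensity, reverse=True)
    return ranked[:top_n]


def dia_windows(mz_start, mz_end, width):
    """DIA: tile the m/z range into contiguous isolation windows [lo, hi)."""
    windows = []
    lo = mz_start
    while lo < mz_end:
        hi = min(lo + width, mz_end)
        windows.append((lo, hi))
        lo = hi
    return windows


def dia_select(precursors, windows):
    """DIA: every precursor inside any window is co-fragmented, whatever its intensity."""
    return [p for p in precursors if any(lo <= p.mz < hi for lo, hi in windows)]


# Hypothetical MS1 scan: two abundant and two low-abundance precursor ions.
ions = [
    Precursor(450.2, 1e7),
    Precursor(612.8, 5e6),
    Precursor(733.4, 2e4),
    Precursor(905.1, 8e3),
]
dda_picked = dda_select(ions, top_n=2)
dia_picked = dia_select(ions, dia_windows(400.0, 1000.0, 25.0))
print("DDA fragments:", [p.mz for p in dda_picked])
print("DIA fragments:", [p.mz for p in dia_picked])
```

DIA's completeness has a cost: each window produces a mixed MS2 spectrum from all co-isolated precursors, which is why DIA data analysis relies on spectral libraries or specialized deconvolution software.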

The use of DIA-based MS in proteomic research has grown substantially, particularly in oncology: in 2019, 42 published studies used DIA-MS for proteomic analysis of various cancer types. DIA has also made an impact in neuroscience proteomics, where it has yielded new insights into diseases such as Alzheimer's.

Ongoing work on "ultra-fast" proteomics, which aims to accelerate DIA-based MS, recently identified and confirmed 43 new plasma protein biomarkers, 11 of which indicate the severity of COVID-19.

According to Johnston, DIA-MS is also helping to standardize proteomic workflows, reducing discrepancies between results obtained in different laboratories and making findings across mass spectrometry-based proteomics more consistent and reliable.


Advancements in Aptamer-Based Proteomics

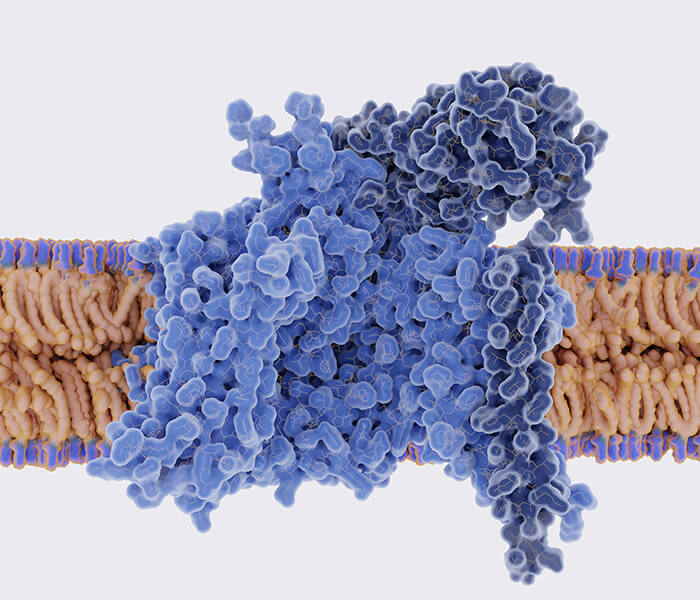

Proteomics has seen a notable shift with the rise of "second-generation" platforms, most prominently aptamer-based technologies that use aptamers in place of traditional antibodies as affinity reagents. Although mass spectrometry (MS) has long dominated proteomic studies, the recent growth of aptamer-based methods is changing how the proteome is explored.

Aptamers are short single-stranded oligonucleotides (DNA or RNA) that fold into specific conformations, allowing them to bind selectively to biological targets, notably proteins. Using aptamers instead of antibodies brings high specificity and selectivity, which is particularly valuable where the limited dynamic range of MS proteomics is a constraint, as in biomarker discovery.
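Selective binding is usually quantified by a dissociation constant, K_d: the lower it is, the tighter the binding. As a minimal sketch, assuming a simple 1:1 binding model with the protein in large excess (so free protein concentration is approximately total), the fraction of aptamer occupied is [P] / ([P] + K_d). The affinity values below are hypothetical and do not describe any particular aptamer platform.

```python
def fraction_bound(protein_nm: float, kd_nm: float) -> float:
    """Fraction of aptamer occupied under a simple 1:1 binding model.

    Assumes the protein is in large excess, so free protein ~= total protein.
    Concentrations and K_d share the same unit (nM here).
    """
    return protein_nm / (protein_nm + kd_nm)


# Hypothetical affinities: tight binding to the intended target,
# weak binding to an off-target protein present at the same concentration.
TARGET_KD_NM = 1.0
OFF_TARGET_KD_NM = 1000.0
conc_nm = 10.0

on = fraction_bound(conc_nm, TARGET_KD_NM)
off = fraction_bound(conc_nm, OFF_TARGET_KD_NM)
print(f"target: {on:.3f}, off-target: {off:.4f}")  # target: 0.909, off-target: 0.0099
```

With a thousand-fold difference in K_d, the target occupies roughly 90 times more aptamer than the off-target protein at equal concentration, which is the sense in which an affinity reagent is "selective".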

Dr. Benjamin Orsburn of the Johns Hopkins University School of Medicine highlights this shift: "While LC-MS has monopolized proteomics for decades, this is evidently no longer the case." An aptamer acts like a molecular magnet that pulls out its specific target, an alternative to the exhaustive "needle in a haystack" search performed by MS. This matters most for low-abundance biomarkers, where aptamer technology performs particularly well.

Recent studies using aptamer-based proteomics have yielded important insights. In non-alcoholic fatty liver disease (NAFLD), one of the leading causes of liver disease worldwide, aptamer-based technologies identified protein signatures of fibrosis. Aptamer-based proteomics has also helped identify biomarkers associated with cardiac remodeling and heart failure events, demonstrating its versatility across diverse biological contexts.

Aptamer technology appears to be less influenced by absolute protein copy numbers than MS techniques are. While MS remains the preferred method for broad proteome identification, aptamer-based approaches are complementary tools that fill gaps and offer a more detailed understanding of specific protein interactions and dynamics.

As aptamer-based proteomics matures, it promises a more targeted, selective, and efficient exploration of the proteome. Challenges remain, but continued development of the technology could transform how proteins are studied and bring us closer to understanding cellular processes and disease mechanisms.

Artificial Intelligence Revolutionizing Protein Research

AI in Drug Discovery and Protein Interaction

Artificial intelligence (AI) applied to proteomics is transforming drug discovery. Understanding how and why specific proteins interact is crucial for advancing cell biology, developing new drugs, and explaining the mechanisms behind both therapeutic effects and side effects. According to Dr. Octavian-Eugen Ganea of MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), exploring protein interactions manually, without AI assistance, would be extremely time-consuming.

EquiDock: A Breakthrough in Protein Docking

Integrating AI into protein docking has produced major advances. EquiDock, a model developed by Ganea's team at MIT, takes the 3D structures of two proteins and directly identifies the regions where they are likely to interact. Trained on around 41,000 protein structures, EquiDock captures complex docking patterns through a geometric-constraint model with thousands of parameters tuned during training. It predicts a protein complex in one to five seconds, 80 to 500 times faster than existing docking software. Such speed is vital for efficiently screening potential drug side effects and narrowing the search space.
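To make "regions where interactions may occur" concrete, the sketch below applies one common geometric definition of a protein-protein interface: residues whose representative atoms lie within a distance cutoff of the partner chain. This is not EquiDock's method, which is a trained neural network; the 8 Å cutoff, the one-point-per-residue representation and the coordinates are all assumptions for illustration.

```python
import math


def interface_residues(chain_a, chain_b, cutoff=8.0):
    """Return sorted indices of residues in each chain lying within `cutoff`
    angstroms of any residue in the partner chain.

    Each chain is a list of (x, y, z) coordinates, one point per residue
    (for example, the C-alpha atom). Brute force: O(len(a) * len(b)).
    """
    a_hits, b_hits = set(), set()
    for i, pa in enumerate(chain_a):
        for j, pb in enumerate(chain_b):
            if math.dist(pa, pb) <= cutoff:
                a_hits.add(i)
                b_hits.add(j)
    return sorted(a_hits), sorted(b_hits)


# Hypothetical toy coordinates (angstroms) for two small "proteins".
receptor = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (20.0, 0.0, 0.0)]
ligand = [(9.0, 5.0, 0.0), (30.0, 30.0, 30.0)]

print(interface_residues(receptor, ligand))  # ([1, 2], [0])
```

For large structures, a spatial index such as a k-d tree would replace the double loop; the brute-force version is kept here for readability.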

AI in Mass Spectrometry and Beyond

AI is also helping researchers extract more insight from mass spectrometry data, whose analysis is time-consuming and prone to errors in protein identification. Deep learning methods such as Prosit, DeepMass, and DeepDIA have been developed to predict fragment-ion spectra and peptide properties such as retention time. Predicted spectra can serve as in-silico spectral libraries for data-independent acquisition (DIA) analysis, which is expected to make DIA workflows more efficient and accurate.
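A predicted spectrum is useful only if it can be scored against a measured one. The sketch below computes the normalized spectral contrast angle, a similarity score often reported for spectrum predictors such as Prosit: 1.0 means identical relative intensities, 0.0 means no overlap. It assumes fragment ions have already been matched by m/z (real tools do this with a mass tolerance), and the intensity values are hypothetical.

```python
import math


def spectral_angle(predicted, observed):
    """Normalized spectral contrast angle between two aligned intensity vectors.

    Both vectors must list intensities for the same fragment ions in the same
    order. Returns 1.0 for identical relative intensities, 0.0 for orthogonal spectra.
    """
    if len(predicted) != len(observed):
        raise ValueError("spectra must be aligned to the same fragment ions")
    norm_p = math.sqrt(sum(x * x for x in predicted))
    norm_o = math.sqrt(sum(x * x for x in observed))
    if norm_p == 0 or norm_o == 0:
        return 0.0
    cosine = sum(p * o for p, o in zip(predicted, observed)) / (norm_p * norm_o)
    cosine = max(-1.0, min(1.0, cosine))  # guard against rounding just outside [-1, 1]
    return 1.0 - 2.0 * math.acos(cosine) / math.pi


# Hypothetical relative intensities for matched fragment ions of one peptide.
predicted = [0.10, 0.85, 0.40, 1.00, 0.05]
observed = [0.12, 0.80, 0.35, 1.00, 0.00]
score = spectral_angle(predicted, observed)
print(round(score, 3))
```

Because the score depends only on relative intensities, spectra recorded at different absolute signal levels can still be compared directly.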

AI in Non-Mass Spectrometry Proteomics and DeepFRET

Non-mass spectrometry proteomics, which is important for understanding protein pathologies such as Alzheimer's disease, also benefits from AI. Methods based on microscopy and Förster resonance energy transfer (FRET) produce large datasets whose analysis is time-consuming and requires substantial expertise. To address this, researchers led by Professor Nikos Hatzakis developed DeepFRET, a machine learning algorithm that automates the recognition of protein motion patterns. Further progress of AI in proteomics will depend on shared standards, consistent data reporting, and coordinated data sharing across research groups, as advocated by the recent DOME (Data, Optimization, Model, Evaluation) recommendations for machine learning in proteomics and metabolomics.
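DeepFRET works on single-molecule FRET intensity traces. As background for what such traces encode, the sketch below computes the textbook apparent FRET efficiency per frame, E = I_A / (I_A + I_D), and inverts the Förster relation E = 1 / (1 + (r / R_0)^6) to estimate the donor-acceptor distance r. It is not DeepFRET code: the traces and the Förster radius R_0 = 5 nm are hypothetical, and real analyses apply background, crosstalk and detection-efficiency corrections omitted here.

```python
def apparent_efficiency(donor, acceptor):
    """Per-frame apparent FRET efficiency (proximity ratio) E = I_A / (I_A + I_D).

    Frames with no signal in either channel yield NaN.
    """
    return [a / (a + d) if (a + d) > 0 else float("nan")
            for d, a in zip(donor, acceptor)]


def distance_from_efficiency(e, r0_nm):
    """Invert the Foerster relation E = 1 / (1 + (r / R0)**6) for the distance r."""
    if not 0.0 < e < 1.0:
        raise ValueError("efficiency must lie strictly between 0 and 1")
    return r0_nm * (1.0 / e - 1.0) ** (1.0 / 6.0)


# Hypothetical donor/acceptor intensity trace: the molecule switches
# from a low-FRET state to a high-FRET state halfway through.
donor = [800, 790, 810, 200, 210, 190]
acceptor = [200, 210, 190, 800, 790, 810]
trace = apparent_efficiency(donor, acceptor)
print([round(e, 2) for e in trace])
print(round(distance_from_efficiency(0.5, r0_nm=5.0), 2))  # E = 0.5 exactly at r = R0
```

Deciding where such a trace switches state, and whether a trace is usable at all, is the kind of pattern-recognition task that is tedious by hand across thousands of molecules.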

The Broader Applications of Proteomics

Continuing progress in proteomics technology could have far-reaching implications, comparable to the transformative impact of the "DNA revolution" on forensic science in the late 20th century. Glendon Parker, a pioneer of protein-based human identification technology and a Ph.D. graduate of the University of California, notes that although the influence of proteomics on forensic science is currently constrained by technological, legal, financial, and cultural factors, its prospects for broader application are promising.

Proteomics has inherent advantages, notably that proteins are generally more stable than DNA. Like DNA, proteins can carry individual identity information, for example in peptides that contain amino-acid variants encoded in a person's genome. Where DNA is degraded, proteomics can help identify bodily fluids, determine biological sex and ancestry, and estimate the approximate time since death from diverse samples such as muscle, bone, and decomposition fluids. Parker emphasizes that, despite historical obstacles, forensic proteomics is poised for a significant transformation that could reshape how forensic evidence is processed and analyzed.
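One way proteins carry individual identity information is through genetically variant peptides: a missense variant in a person's DNA changes one amino acid, and therefore changes the peptide that mass spectrometry detects after enzymatic digestion. The sketch below shows this with an in-silico trypsin digest (cleave after K or R, but not before P). The protein sequence and substitutions are hypothetical, and the simplified cleavage rule ignores missed cleavages and known exceptions.

```python
import re


def tryptic_digest(sequence):
    """In-silico trypsin digest: cleave after K or R unless the next residue is P.

    No missed cleavages are modelled.
    """
    # Zero-width split point: preceded by K/R and not followed by P.
    return [p for p in re.split(r"(?<=[KR])(?!P)", sequence) if p]


def variant_peptides(reference, position, alt_residue):
    """Peptides present only in the variant protein for a single amino-acid
    substitution at 0-based `position`."""
    variant = reference[:position] + alt_residue + reference[position + 1:]
    ref_set = set(tryptic_digest(reference))
    return [p for p in tryptic_digest(variant) if p not in ref_set]


# Hypothetical reference protein sequence (one-letter amino-acid codes).
ref = "MAGKPLSDEVRAPQFLKSEGR"
print(tryptic_digest(ref))          # ['MAGKPLSDEVR', 'APQFLK', 'SEGR']
print(variant_peptides(ref, 13, "E"))  # Q->E substitution: ['APEFLK']
print(variant_peptides(ref, 14, "K"))  # F->K adds a cleavage site: ['APQK', 'LK']
```

Detecting a variant-only peptide in a sample implies the individual carries the corresponding DNA variant, even when the DNA itself is too degraded to sequence.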

Although the current influence of proteomics in forensics is limited, its adoption in criminal investigations and prosecutions is expected to be a key driver of growth in the field. In the short term, proteomics can complement DNA analysis, particularly where DNA alone does not provide conclusive evidence. Looking further ahead, proteomics is expected to extend its impact well beyond forensic science: as the technology evolves, its applications across scientific domains promise to transform our understanding of biological systems and disease mechanisms.

Challenges and Future Prospects of Proteomics

Challenges: Technical and Complexity

Instrumentation Limitations:

Progress in proteomics is closely tied to advances in instrumentation, yet current technologies remain limited in sensitivity, resolution, and dynamic range. Overcoming these constraints is crucial to realizing the full potential of proteomic analyses.
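A back-of-the-envelope calculation shows why dynamic range is such a demanding requirement. Using illustrative round values assumed here (not taken from the text), blood plasma albumin at roughly 40 mg/mL versus a low-abundance cytokine at roughly 1 pg/mL:

```latex
\log_{10}\!\left(\frac{40\ \mathrm{mg/mL}}{1\ \mathrm{pg/mL}}\right)
= \log_{10}\!\left(\frac{4\times10^{-2}\ \mathrm{g/mL}}{10^{-12}\ \mathrm{g/mL}}\right)
= \log_{10}\!\left(4\times10^{10}\right) \approx 10.6
```

Capturing both proteins in a single measurement would therefore require spanning more than ten orders of magnitude of concentration.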

Data Complexity and Analysis:

Proteomic data are inherently complex. The volume of information generated, combined with the dynamic nature of proteins, demands sophisticated computational tools for analysis, and integrating multi-omics data and developing robust algorithms are essential for comprehensive insights.

Sample Preparation Variability:

Differences in sample preparation techniques across laboratories can introduce bias and reduce reproducibility. Standardized protocols and established quality control measures are essential to mitigate this challenge.
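One common quality-control measure is to run the same reference sample in every laboratory or batch and track the coefficient of variation (CV) of each protein's measured abundance. A minimal sketch, assuming hypothetical protein names and intensities and a 20% CV threshold chosen purely for illustration:

```python
import statistics


def coefficient_of_variation(values):
    """Percent CV = 100 * sample standard deviation / mean."""
    mean = statistics.mean(values)
    return 100.0 * statistics.stdev(values) / mean


def flag_variable_proteins(replicates, max_cv=20.0):
    """Return names of proteins whose CV across replicate runs exceeds max_cv percent."""
    return sorted(name for name, vals in replicates.items()
                  if coefficient_of_variation(vals) > max_cv)


# Hypothetical intensities for the same QC sample processed in three labs.
qc_runs = {
    "PROTEIN_A": [1.00e6, 1.05e6, 0.97e6],
    "PROTEIN_B": [2.0e5, 3.5e5, 1.1e5],
    "PROTEIN_C": [4.1e4, 4.0e4, 4.3e4],
}
print(flag_variable_proteins(qc_runs))  # ['PROTEIN_B']
```

Proteins flagged this way point to steps in the workflow, such as digestion or enrichment, where protocols may need tighter standardization.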

Future Prospects: Expanding Horizons

Advancements in Mass Spectrometry:

Ongoing innovations in mass spectrometry hold the key to addressing current limitations. Improvements in sensitivity, speed, and the ability to characterize post-translational modifications will broaden the scope of proteomic analyses.

Integration with Multi-Omics:

The future of proteomics lies in its seamless integration with other omics disciplines. Combining proteomic data with genomics, transcriptomics, and metabolomics will provide a holistic understanding of biological systems, paving the way for personalized medicine and precision biology.
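Integration can take many forms; one of the simplest first steps is to join two layers on shared gene identifiers and ask how well transcript and protein levels agree. The sketch below does this with a Spearman rank correlation, implemented from scratch so it is self-contained. Gene IDs and abundance values are hypothetical, and real analyses must first map between identifier systems (for example, protein accessions to gene symbols).

```python
def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_across_layers(mrna, protein):
    """Join two omics layers on shared gene IDs; return (n_shared, Spearman rho)."""
    shared = sorted(set(mrna) & set(protein))
    rx = average_ranks([mrna[g] for g in shared])
    ry = average_ranks([protein[g] for g in shared])
    return len(shared), pearson(rx, ry)


# Hypothetical transcript (TPM-like) and protein (intensity) measurements.
mrna = {"GENE1": 120.0, "GENE2": 15.0, "GENE3": 60.0, "GENE4": 5.0, "GENE5": 300.0}
protein = {"GENE1": 8.1e5, "GENE2": 2.0e5, "GENE3": 9.5e4, "GENE5": 1.2e6, "GENE6": 3.3e4}
n, rho = spearman_across_layers(mrna, protein)
print(n, round(rho, 2))
```

A rank-based correlation is used because transcript and protein abundances are on different scales and often related non-linearly; genes measured in only one layer (GENE4, GENE6) are simply excluded by the join.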

Technological Convergence:

Convergence with technologies such as artificial intelligence and machine learning will transform data interpretation and accelerate the identification of meaningful biological patterns. More capable algorithms will make proteomic analyses more efficient and large-scale studies more feasible.

Standardization and Collaboration:

Establishing standardized protocols and fostering collaboration among research communities are paramount. Shared resources and data repositories will facilitate cross-validation of findings, ensuring the robustness and reproducibility of proteomic studies.